Motion-Capture 3D Sign Language Resources

Project Abstract

The project expands the portfolio of language resources available in ELG by adding a data-set and a search tool for Czech sign language. It addresses a critical lack of quality data needed for research and for the subsequent deployment of machine learning techniques in this area. As an output, the project currently provides the largest collection of sign language acquired by state-of-the-art 3D human body recording technology and enables the future successful deployment of communication technologies, especially machine translation and sign language synthesis.

Project type

a) contributes resources, services, tools or data sets to ELG

Main goal

The main goal of the project is to extend the portfolio of language resources available in the ELG by adding data-sets and tools for sign language (SL). SL resources are scarce: they consist of small SL corpora, usually designed for a specific domain such as linguistics or computer science. The situation is even worse for “small” languages such as Czech SL (CSE), which is the focus of our project.

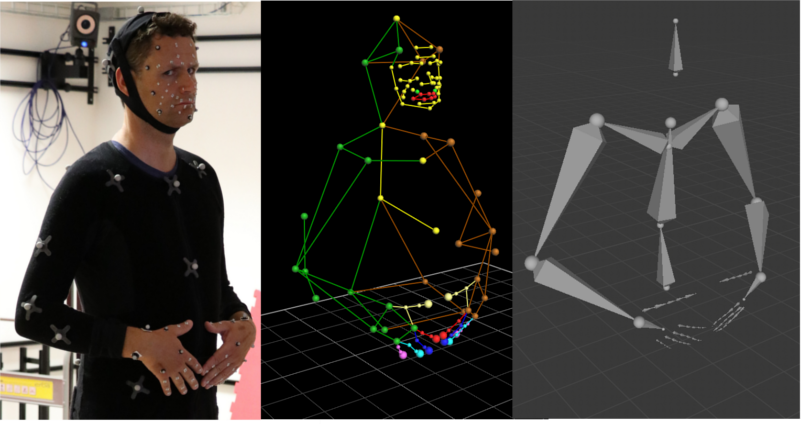

We want to create a large data-set of high-precision SL motion data. Current SL data-sets are mostly recorded by video camera. Motion capture technology guarantees a more precise recording of the signer’s movements directly in 3D space.

We have already recorded a small data-set as a proof of concept. This data-set was made for CSE, covers the domain of weather forecasts, is rather limited in size and contains only one signer [1].

The proposed project aims to acquire further motion capture data to cover a wider domain, more grammatical contexts and more signers. We plan to record new data, post-process it properly, annotate glosses and develop tools for extracting data from the resulting data-set.

Project OUTPUT:

- Motion capture data-set for CSE consisting of continuous signing and vocabulary, available for download;

- Tools for data-set usage enabling search for single glosses, phrases or small motion sub-units (for example, a given hand shape or action).
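To illustrate what a search for single glosses and phrases could look like, here is a minimal Python sketch. It is not the project's actual tool: the `GlossSegment` record, its field names, the functions `find_gloss` and `find_phrase`, and the toy entries in `DEMO_SEGMENTS` are all hypothetical and only assume that each annotated gloss is stored with a recording identifier and a start and end frame.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class GlossSegment:
    """One annotated gloss; all field names are illustrative assumptions."""
    recording: str    # hypothetical recording identifier
    gloss: str        # gloss label from the annotation
    start_frame: int  # first motion capture frame of the sign
    end_frame: int    # last motion capture frame of the sign (inclusive)


def find_gloss(segments: Sequence[GlossSegment], gloss: str) -> List[GlossSegment]:
    """Return every segment whose gloss matches, ignoring letter case."""
    target = gloss.casefold()
    return [s for s in segments if s.gloss.casefold() == target]


def find_phrase(
    segments: Sequence[GlossSegment], phrase: Sequence[str]
) -> List[Tuple[str, int, int]]:
    """Return (recording, start_frame, end_frame) for each run of
    consecutive glosses within one recording that matches the phrase."""
    wanted = [g.casefold() for g in phrase]
    hits: List[Tuple[str, int, int]] = []
    if not wanted:
        return hits

    # Group segments by recording so that a phrase never spans two recordings.
    by_recording: Dict[str, List[GlossSegment]] = {}
    for seg in segments:
        by_recording.setdefault(seg.recording, []).append(seg)

    n = len(wanted)
    for recording, segs in by_recording.items():
        segs.sort(key=lambda s: s.start_frame)  # temporal order
        labels = [s.gloss.casefold() for s in segs]
        for i in range(len(labels) - n + 1):
            if labels[i:i + n] == wanted:
                hits.append((recording, segs[i].start_frame, segs[i + n - 1].end_frame))
    return hits


# Toy annotation records, invented purely for illustration.
DEMO_SEGMENTS = [
    GlossSegment("rec_a", "TOMORROW", 0, 40),
    GlossSegment("rec_a", "RAIN", 41, 95),
    GlossSegment("rec_a", "WIND", 96, 150),
    GlossSegment("rec_b", "RAIN", 10, 60),
    GlossSegment("rec_b", "TOMORROW", 61, 100),
]
```

The returned frame ranges could then be used to cut the matching motion capture frames out of a recording. Searching for smaller motion sub-units such as hand shapes would need an additional annotation tier, which this sketch does not model.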

Team focus:

- Miloš Železny, Ph.D.: Research Associate Professor at the Department of Cybernetics (DC): project leader - Machine learning (ML), Artificial intelligence (AI), Computer vision (CV) and Human-machine interaction;

- Zdeněk Krňoul, Ph.D.: Assistant Professor at the DC: 3D scanning, ML and AI;

- Pavel Jedlička, MSc: Ph.D. candidate at UWB: 3D scanning and motion capture analysis;

- Luděk Muller, Professor, Ph.D.: Head of AI group at DC, co-author of speech and SL corpora (LDC, ELRA) - ML and AI.

Project RUN overview:

- Chosen domain (topic limited);

- Multiple native signers (at least 3);

- 3D motion capture recording and post-processing;

Recent projects:

- [1] Jedlička, et al.: Sign Language Motion Capture Dataset for Data-driven Synthesis, LREC 2020.

- [2] Jedlička, et al.: Data Acquisition process for musculoskeletal model of human shoulder, WCB 2018.

- [3] Železny, et al.: Use of motion capture and eye tracking in scientific tasks, Digitalna obrada govora i slike DOGS 2017, Novi Sad, November 2017, 65-68.

Lab devices:

- VICON MoCap system 8xT20 (high precision, high FPS);

- 2x Kinect v2;

- Cybergloves3;

- Vicon Cara.

ZČU
